Wondering why some of your pages don’t show up in Google’s search results?

Crawlability problems could be the culprits.

In this guide, we’ll cover what crawlability problems are, how they affect SEO, and how to fix them.

Let’s get started.

What Are Crawlability Problems?

Crawlability problems are issues that prevent search engines from accessing your website’s pages.

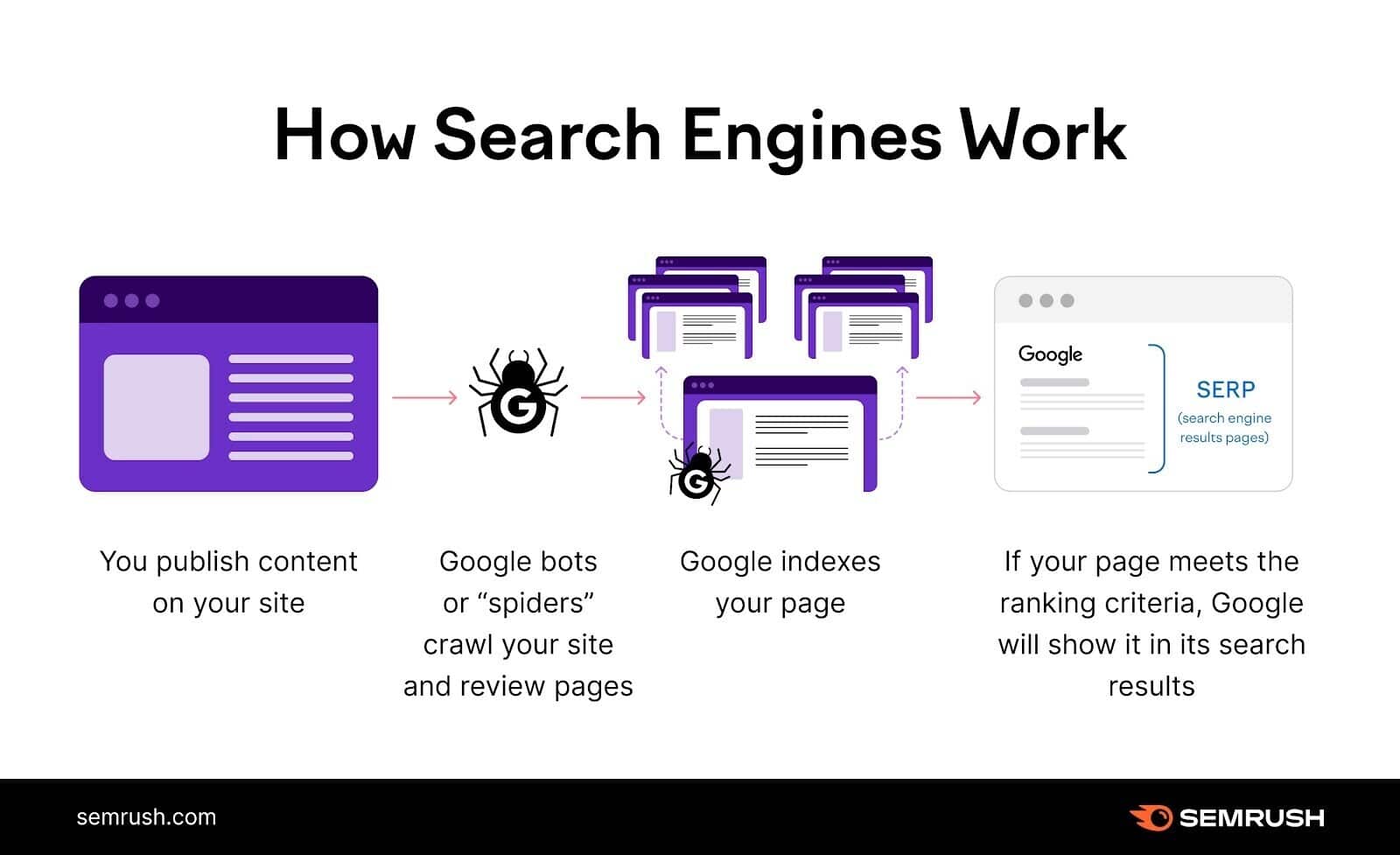

Search engines like Google use automated bots to read and analyze your pages. This is called crawling.

But if there are crawlability problems, these bots may encounter obstacles that hinder their ability to properly access your pages.

Common crawlability problems include:

- Nofollow links (which tell Google not to follow the link or pass ranking power to that page)

- Redirect loops (when two pages redirect to each other to create an infinite loop)

- Bad site structure

- Slow site speed

How Do Crawlability Issues Affect SEO?

Crawlability problems can drastically affect your SEO game.

Why?

Because crawlability problems make some (or all) of your pages practically invisible to search engines.

They can’t find them. Which means they can’t index them (i.e., save them in a database to display in relevant search results).

This means a potential loss of search engine (organic) traffic and conversions.

Your pages must be both crawlable and indexable to rank in search engines.

15 Crawlability Problems & How to Fix Them

1. Pages Blocked in Robots.txt

Search engines first look at your robots.txt file. This tells them which pages they should and shouldn’t crawl.

If your robots.txt file looks like this, it means your entire website is blocked from crawling:

User-agent: *
Disallow: /

Fixing this problem is simple. Just replace the “disallow” directive with “allow.” Which should enable search engines to access your entire website.

Like this:

User-agent: *
Allow: /

In other cases, only certain pages or sections are blocked. For instance:

User-agent: *
Disallow: /products/

Here, all the pages in the “products” subfolder are blocked from crawling.

Solve this problem by removing the specified subfolder or page. Search engines ignore an empty “disallow” directive:

User-agent: *
Disallow:

Or you could use the “allow” directive instead of “disallow” to instruct search engines to crawl your entire website, like we did earlier.
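If you want to check a rule before you publish it, Python’s standard library includes a robots.txt parser. Here’s a minimal sketch, assuming you paste in your file’s contents as a string. The function name is_crawlable and the example.com URLs are hypothetical. Treat the result as a quick sanity check, not a guarantee: Python’s parser applies the first rule that matches, while Google applies the most specific one, so results can differ when “allow” and “disallow” rules overlap.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() reads rules from a list of lines, so no network request is made
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules that block only the "products" subfolder
rules = "User-agent: *\nDisallow: /products/"

print(is_crawlable(rules, "https://www.example.com/products/shoes"))  # False
print(is_crawlable(rules, "https://www.example.com/blog/post"))  # True
```

Swap in the other snippets from this section to confirm that “Disallow: /” blocks everything and that an empty “Disallow:” blocks nothing.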

Note

It’s common practice to use your robots.txt file to block pages you don’t want crawled or shown in search results, like admin and “thank you” pages. It’s only a crawlability problem when you block pages meant to be visible in search results.

2. Nofollow Links

The nofollow tag tells search engines not to crawl the links on a webpage.

And the tag looks like this:

<meta name="robots" content="nofollow">

If this tag is present on your pages, the other pages they link to might not get crawled. Which creates crawlability problems on your site.
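To spot nofollow in a page’s HTML yourself, you can scan for both forms: the page-level meta robots tag shown above and individual links marked rel="nofollow". This is a rough sketch using Python’s built-in HTML parser. It assumes you already have the page’s HTML as a string, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class NofollowFinder(HTMLParser):
    """Collects meta robots directives and hrefs of links marked rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.robots_directives = set()
        self.nofollow_links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # HTMLParser lowercases tag and attribute names
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.robots_directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )
        elif tag == "a" and "nofollow" in (attrs.get("rel") or "").lower().split():
            self.nofollow_links.append(attrs.get("href"))


def audit_nofollow(html: str) -> dict:
    finder = NofollowFinder()
    finder.feed(html)
    finder.close()
    return {
        # "none" is shorthand for "noindex, nofollow"
        "page_nofollow": bool(finder.robots_directives & {"nofollow", "none"}),
        "nofollow_links": finder.nofollow_links,
    }


sample = '<meta name="robots" content="nofollow"><a href="/a" rel="nofollow">A</a><a href="/b">B</a>'
print(audit_nofollow(sample))
# {'page_nofollow': True, 'nofollow_links': ['/a']}
```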

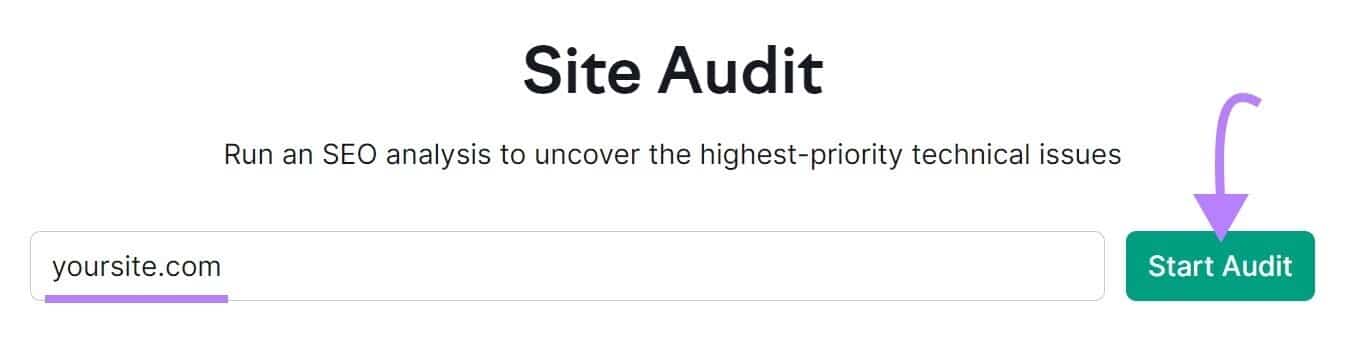

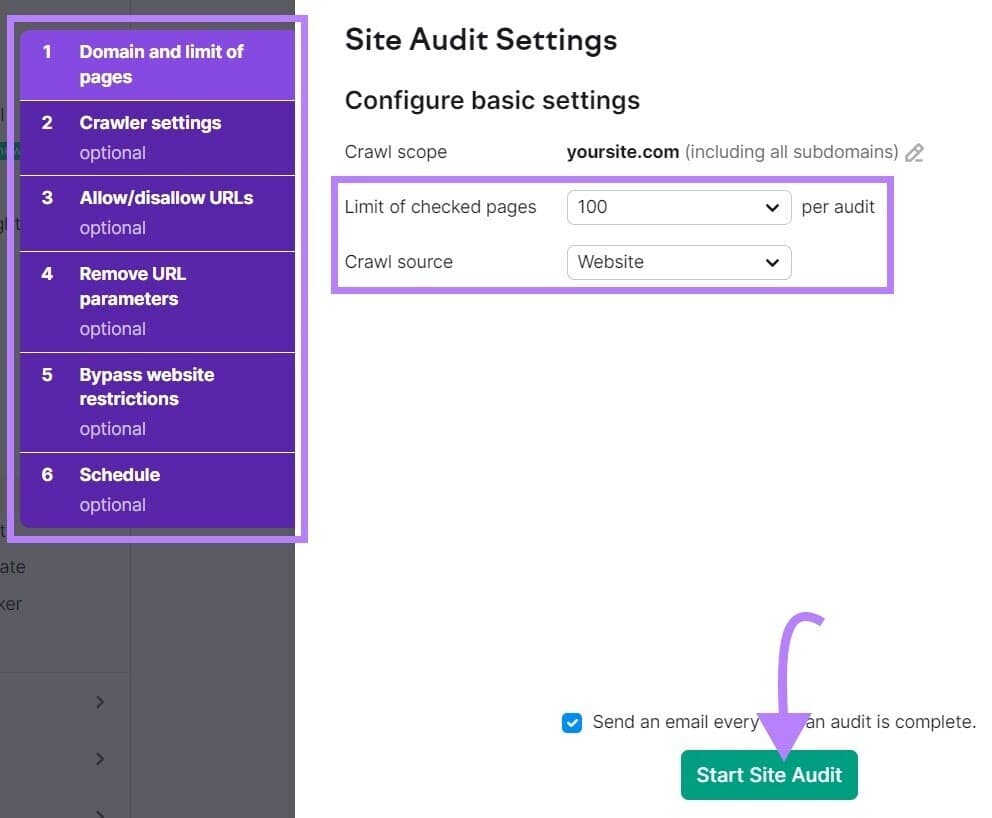

Check for nofollow links like this with Semrush’s Site Audit tool.

Open the tool, enter your website, and click “Start Audit.”

The “Site Audit Settings” window will appear.

From here, configure the basic settings and click “Start Site Audit.”

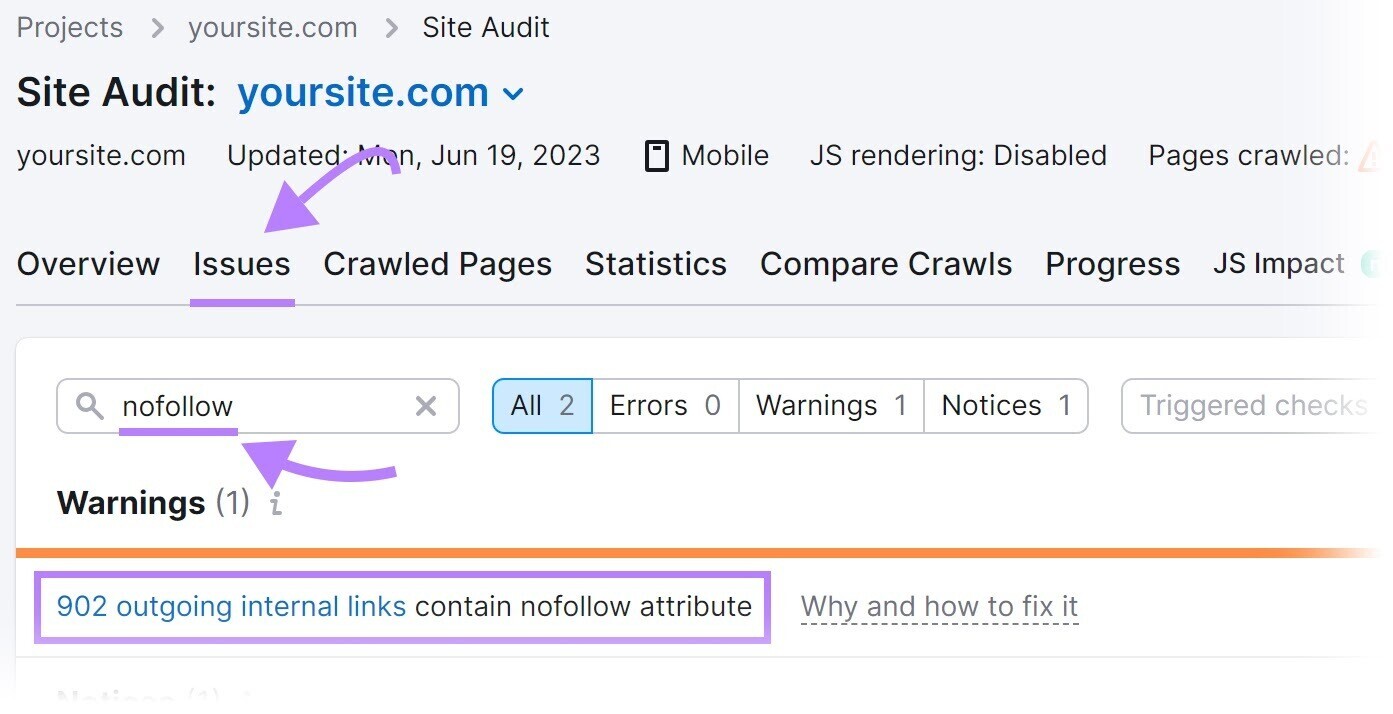

Once the audit is complete, navigate to the “Issues” tab and search for “nofollow.”

If nofollow links are detected, click “# outgoing internal links contain nofollow attribute” to view a list of pages that have a nofollow tag.

Review the pages and remove the nofollow tags if they shouldn’t be there.

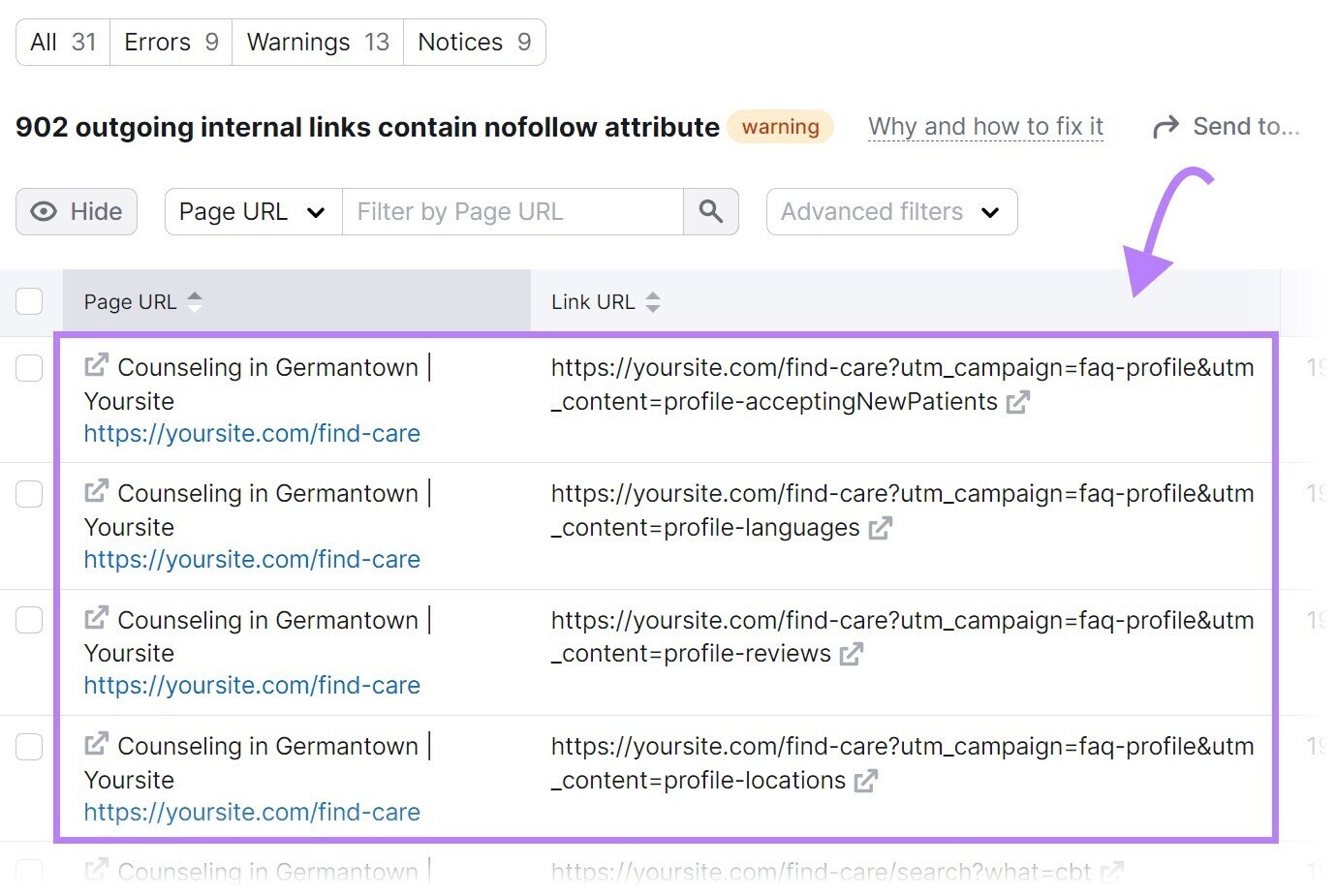

3. Bad Site Architecture

Site architecture is how your pages are organized across your website.

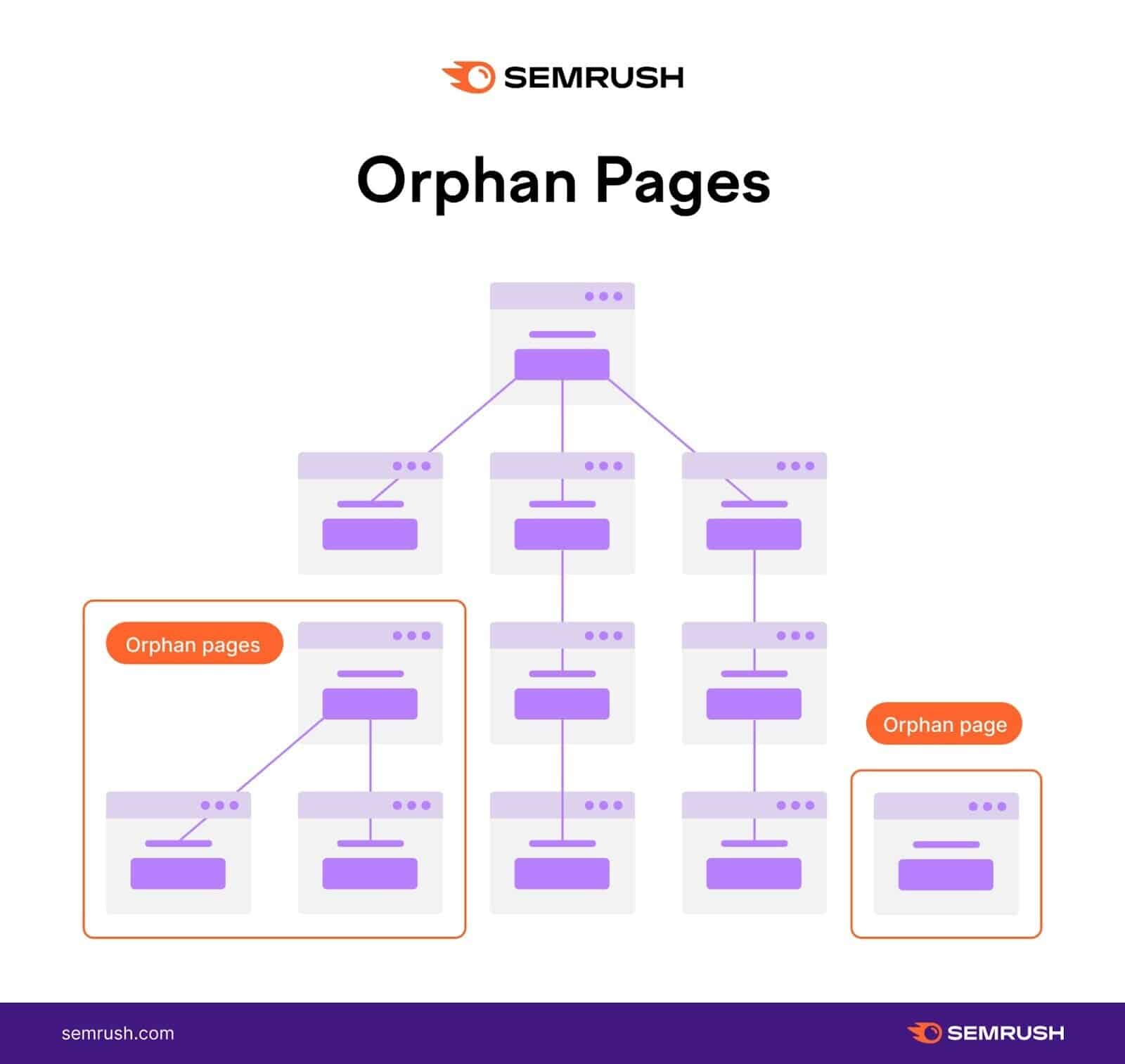

A good site architecture ensures every page is just a few clicks away from the homepage, and that there are no orphan pages (i.e., pages with no internal links pointing to them). This helps search engines easily access all pages.

But a bad site architecture can create crawlability issues.

Notice the example site structure depicted below. It has orphan pages.

Because there’s no linked path to them from the homepage, they may go unnoticed when search engines crawl the site.

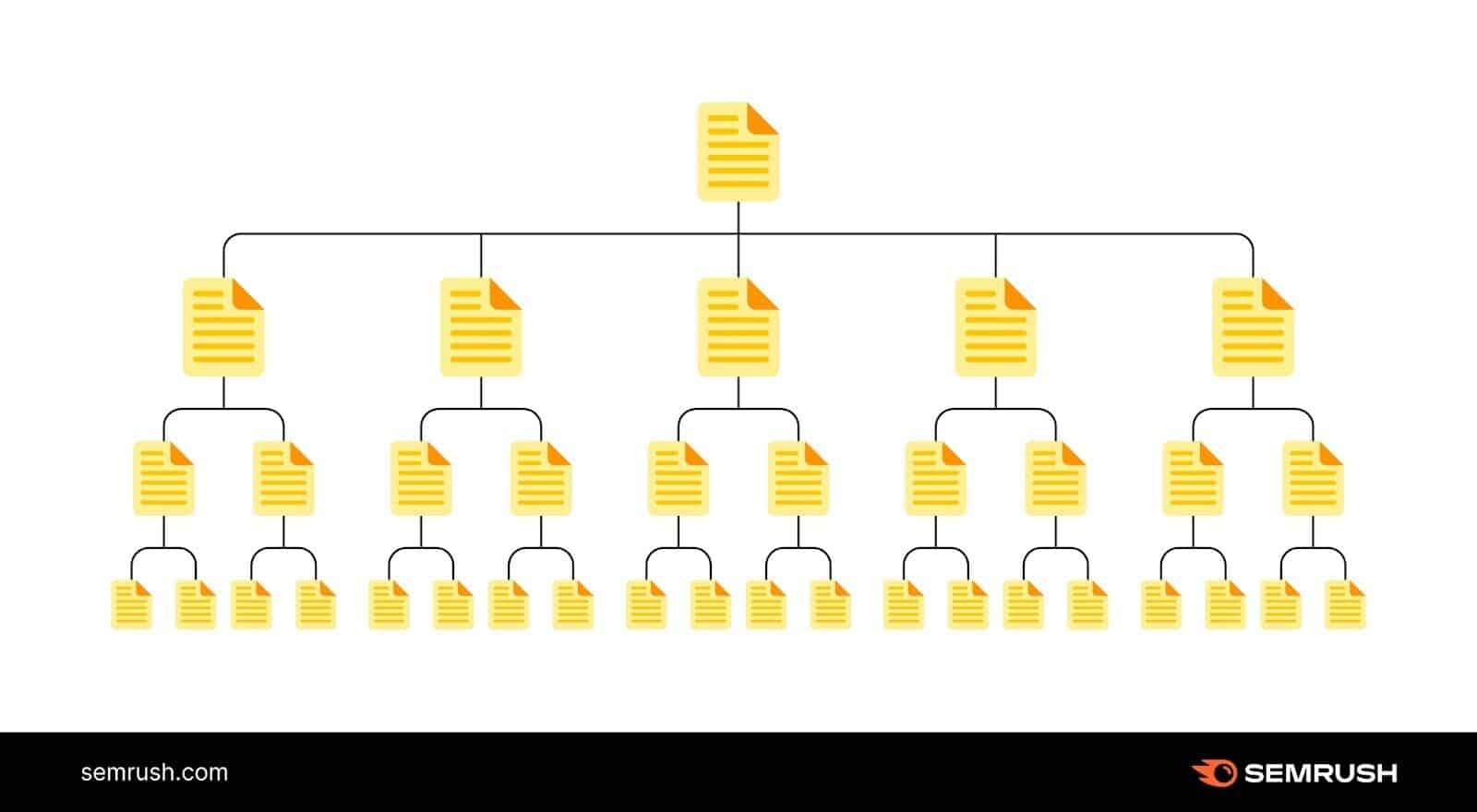

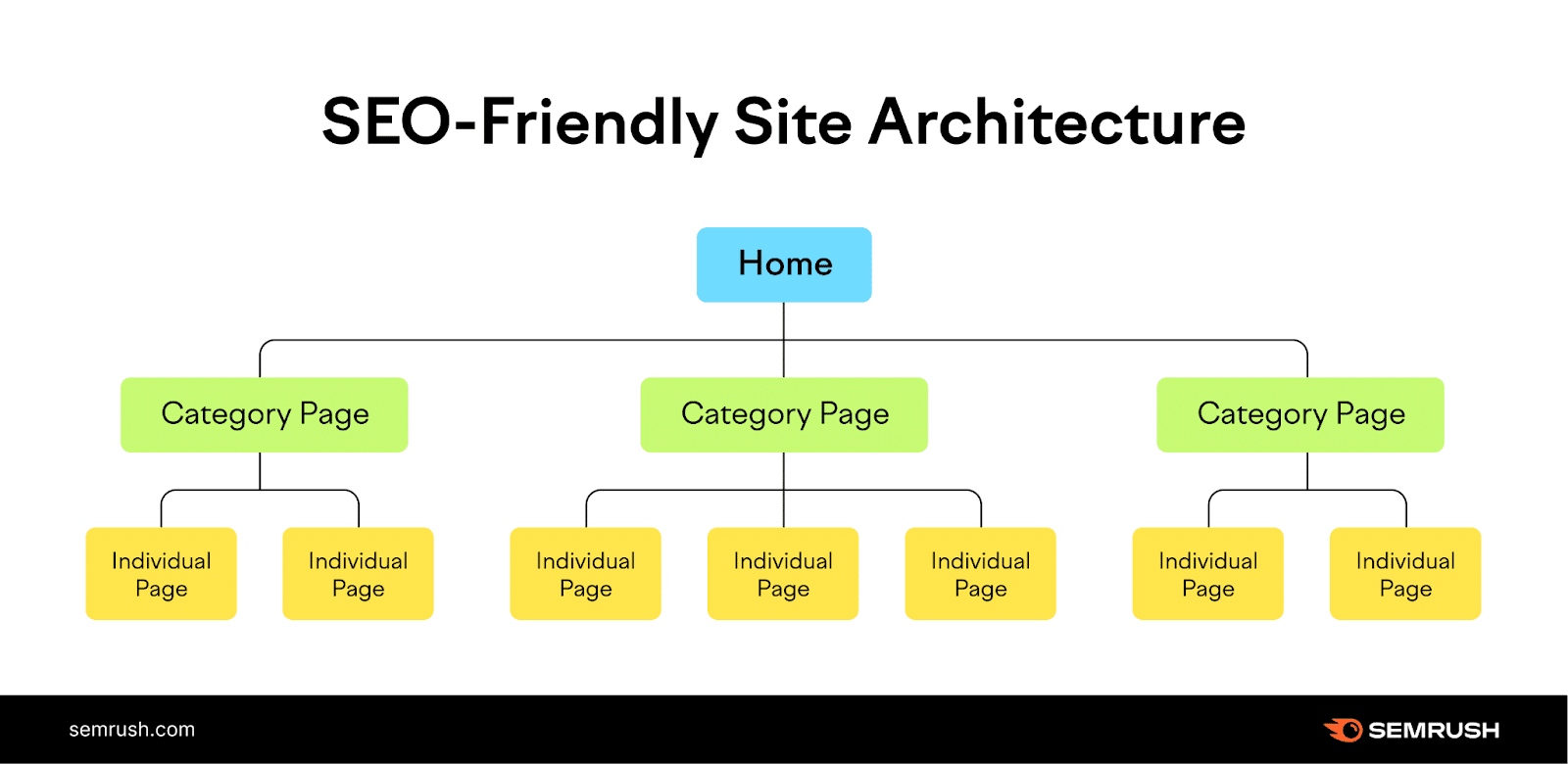

The solution is simple: Create a site structure that logically organizes your pages in a hierarchy through internal links.

Like this:

In the example above, the homepage links to category pages, which then link to individual pages on your site.

And this provides a clear path for crawlers to find all your important pages.
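You can think of this as a graph: each page is a node and each internal link is an edge. A breadth-first search from the homepage then tells you how many clicks each page is from the homepage. Any page missing from the result has no linked path from the homepage at all. This sketch assumes you already have a map of each page’s internal links (for example, exported from a crawl). The page paths are made up.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], homepage: str) -> dict[str, int]:
    """Breadth-first search: fewest clicks from homepage to each reachable page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: homepage -> category pages -> individual pages
links = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/boots", "/shoes/sandals"],
    "/bags": ["/bags/totes"],
}
all_pages = {"/", "/shoes", "/bags", "/shoes/boots", "/shoes/sandals", "/bags/totes", "/old-sale"}

depths = click_depths(links, "/")
print(depths["/shoes/boots"])  # 2
print(sorted(all_pages - set(depths)))  # ['/old-sale']
```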

4. Lack of Internal Links

Pages without internal links can create crawlability problems.

Search engines will have trouble discovering those pages.

So, identify your orphan pages. And add internal links to them to avoid crawlability issues.

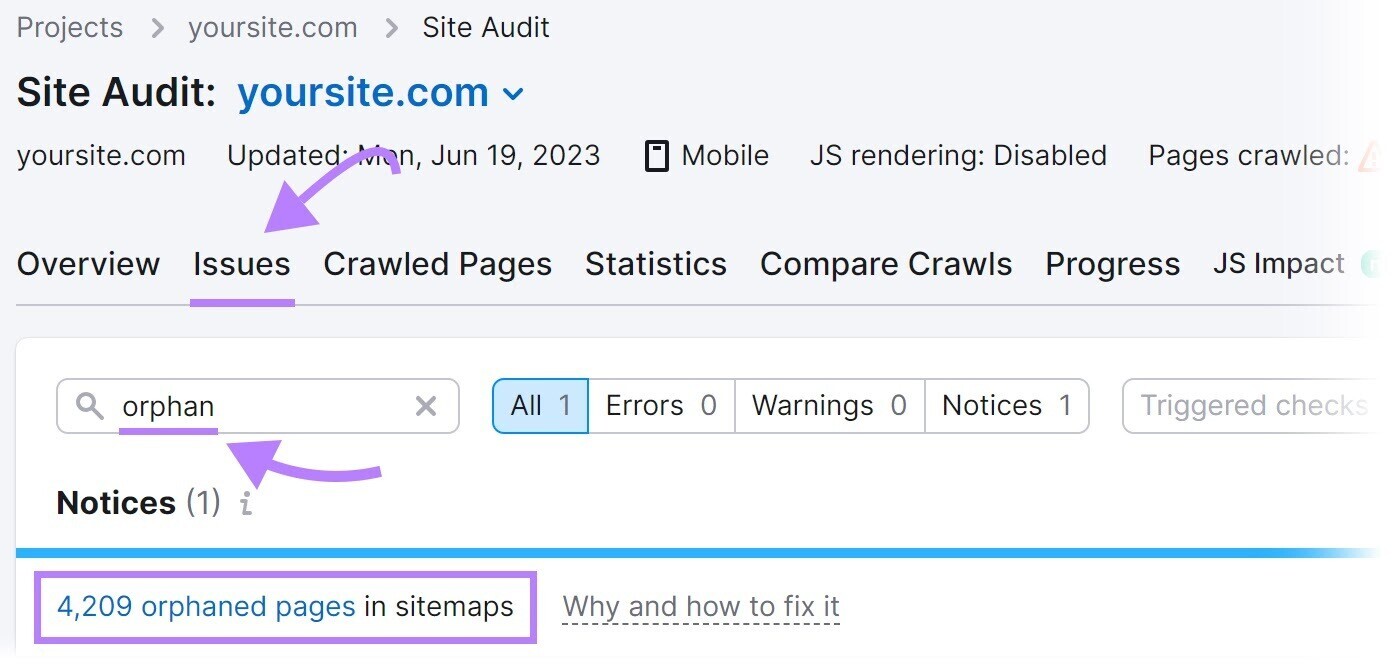

Find orphan pages using Semrush’s Site Audit tool.

Configure the tool to run your first audit.

Then, go to the “Issues” tab and search for “orphan.”

You’ll see whether there are any orphan pages present on your site.

To solve this problem, add internal links to orphan pages from other relevant pages on your site.
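You can also find orphans yourself if you have two lists: the pages that should exist (your sitemap is a natural source) and the internal links found on each page. Finding orphans then comes down to a set difference, because any page that no other page links to is an orphan. This is a minimal sketch under those assumptions. The function find_orphans and the paths are hypothetical.

```python
def find_orphans(all_pages: set[str], links: dict[str, list[str]], homepage: str = "/") -> list[str]:
    """Return pages that receive no internal links from any other page."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    # The homepage is where crawling starts, so it isn't reported as an orphan
    return sorted(page for page in all_pages if page not in linked_to and page != homepage)


pages = {"/", "/blog", "/blog/crawl-budget", "/blog/old-announcement"}
links = {
    "/": ["/blog"],
    "/blog": ["/blog/crawl-budget"],
    "/blog/old-announcement": ["/blog/old-announcement"],
}
print(find_orphans(pages, links))  # ['/blog/old-announcement']
```

This check only counts inbound links. A page linked only from another orphan still has no path from the homepage, which a crawl that starts at the homepage would catch.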

5. Bad Sitemap Management

A sitemap provides a list of pages on your site that you want search engines to crawl, index, and rank.

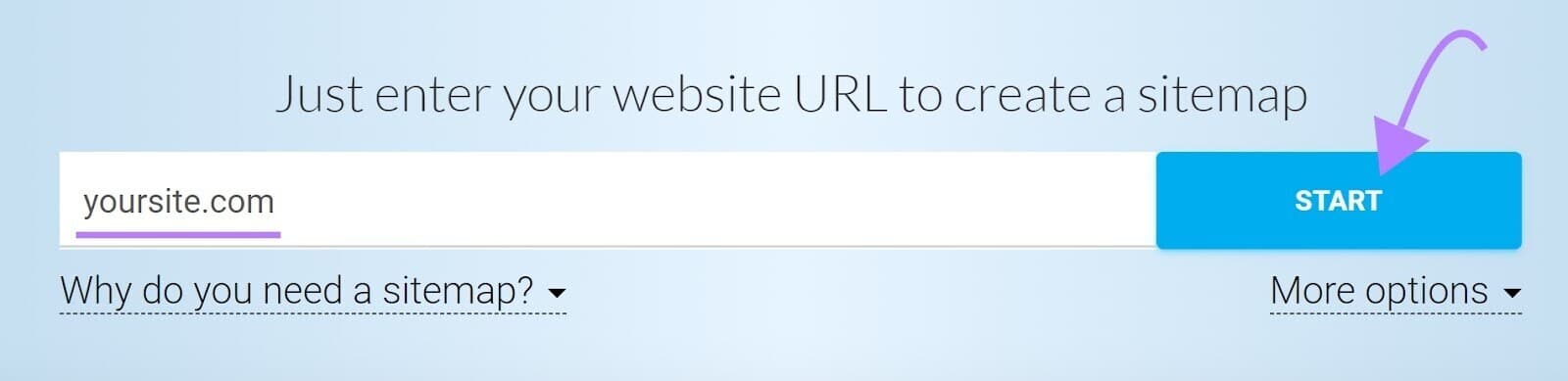

If your sitemap excludes any pages you want to be found, they might go unnoticed. And create crawlability issues. A tool such as XML Sitemaps Generator can help you include all pages meant to be crawled.

Enter your website URL, and the tool will generate a sitemap for you automatically.

Then, save the file as “sitemap.xml” and upload it to the root directory of your website.

For example, if your website is www.example.com, then your sitemap should be accessible at www.example.com/sitemap.xml.
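If you’d rather generate the file yourself, a sitemap is a small XML document that follows the sitemaps.org protocol. It has a “urlset” element that contains one “url” entry per page, and each entry holds a “loc” element with the page’s full URL. Here’s a minimal sketch using Python’s standard library. The function name and URLs are hypothetical, and it leaves out optional fields like “lastmod.”

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> bytes:
    """Return sitemap XML (UTF-8 bytes) with each absolute URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # characters like & are escaped on output
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


xml_bytes = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/your-post",
])
# Save these bytes as sitemap.xml and upload the file to your site's root directory
print(xml_bytes.decode("utf-8"))
```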

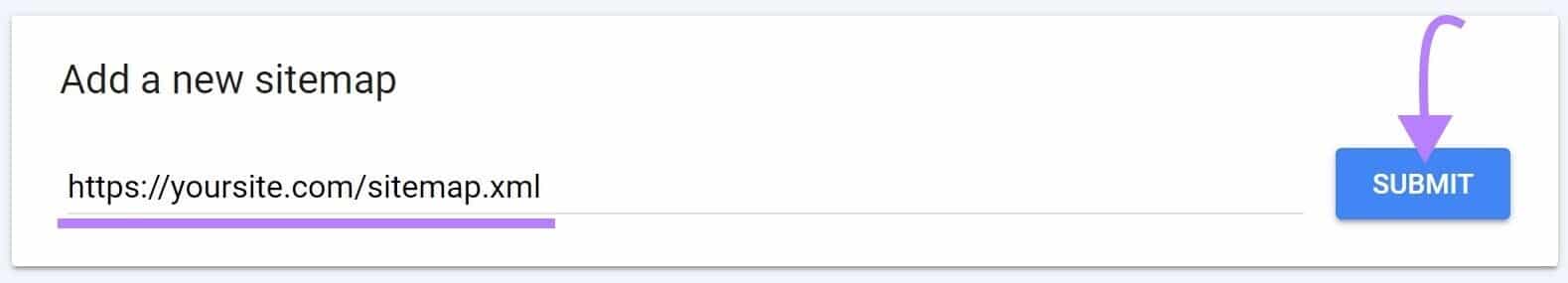

Finally, submit your sitemap to Google in your Google Search Console account.

To do that, access your account.

Click “Sitemaps” in the left-hand menu. Then, enter your sitemap URL and click “Submit.”

6. ‘Noindex’ Tags

A “noindex” meta robots tag instructs search engines not to index a page.

And the tag looks like this:

<meta name="robots" content="noindex">

Although the noindex tag is intended to control indexing, it can create crawlability issues if you leave it on your pages for a long time.

Google treats long-term “noindex” tags as nofollow tags, as confirmed by Google’s John Mueller.

Over time, Google will stop crawling the links on those pages altogether.

So, if your pages aren’t getting crawled, long-term noindex tags could be the culprit.

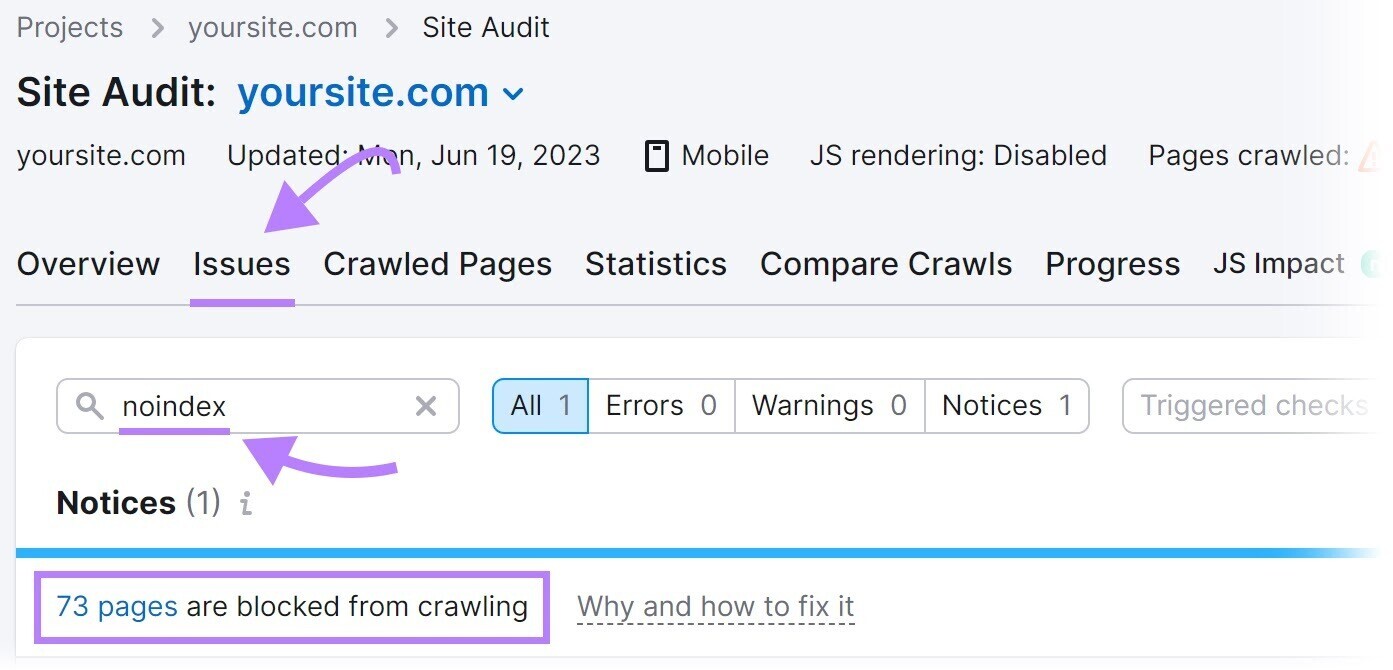

Identify these pages using Semrush’s Site Audit tool.

Set up a project in the tool to run your first crawl.

Once it’s complete, head over to the “Issues” tab and search for “noindex.”

The tool will list pages on your site with a “noindex” tag.

Review these pages and remove the “noindex” tag where appropriate.

Note

Having a “noindex” tag on some pages, like pay-per-click (PPC) landing pages and “thank you” pages, is common practice to keep them out of Google’s index. It’s only a problem when you noindex pages meant to rank in search engines.
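A quick way to catch the problem case from this note is to compare your sitemap (the pages you want indexed) against each page’s meta robots tag. This sketch assumes you already have each page’s HTML, and names like noindexed_sitemap_pages are hypothetical. It only checks the meta tag. It doesn’t catch other ways a page can be noindexed, such as an X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="...">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            for part in (attrs.get("content") or "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow"
    return bool(parser.directives & {"noindex", "none"})


def noindexed_sitemap_pages(sitemap_urls: list[str], pages_html: dict[str, str]) -> list[str]:
    """Pages you asked search engines to index (via the sitemap) that say noindex."""
    return [url for url in sitemap_urls if is_noindexed(pages_html.get(url, ""))]


pages_html = {
    "https://www.example.com/pricing": '<meta name="robots" content="noindex">',
    "https://www.example.com/blog": "<title>Blog</title>",
}
print(noindexed_sitemap_pages(list(pages_html), pages_html))
# ['https://www.example.com/pricing']
```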

7. Slow Site Speed

When search engine bots visit your site, they have limited time and resources to devote to crawling. This is commonly referred to as crawl budget.

Slow site speed means it takes longer for pages to load. And reduces the number of pages bots can crawl within that crawl session.

Which means important pages could be excluded.
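To see why speed matters, here’s a deliberately simplified model. It assumes, hypothetically, that a bot spends a fixed amount of time on your site per session. Google doesn’t publish such a number, and its documentation describes crawl budget as shaped by crawl capacity and crawl demand. But the arithmetic shows the direction of the effect: the slower each page responds, the fewer pages fit into the same window.

```python
def pages_per_session(budget_seconds: float, seconds_per_page: float) -> int:
    """Toy model: how many pages fit into a fixed crawl time budget."""
    if seconds_per_page <= 0:
        raise ValueError("seconds_per_page must be positive")
    return int(budget_seconds // seconds_per_page)


# Hypothetical numbers: the same 60-second window at two page speeds
print(pages_per_session(60, 0.5))  # 120
print(pages_per_session(60, 2.0))  # 30
```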

Work to solve this problem by improving your overall website performance and speed.

Start with our guide to page speed optimization.

8. Internal Broken Links

Internal broken links are links that point to dead pages on your site.

They return a 404 error like this:

Broken links can have a significant impact on website crawlability. Because they prevent search engine bots from accessing the linked pages.

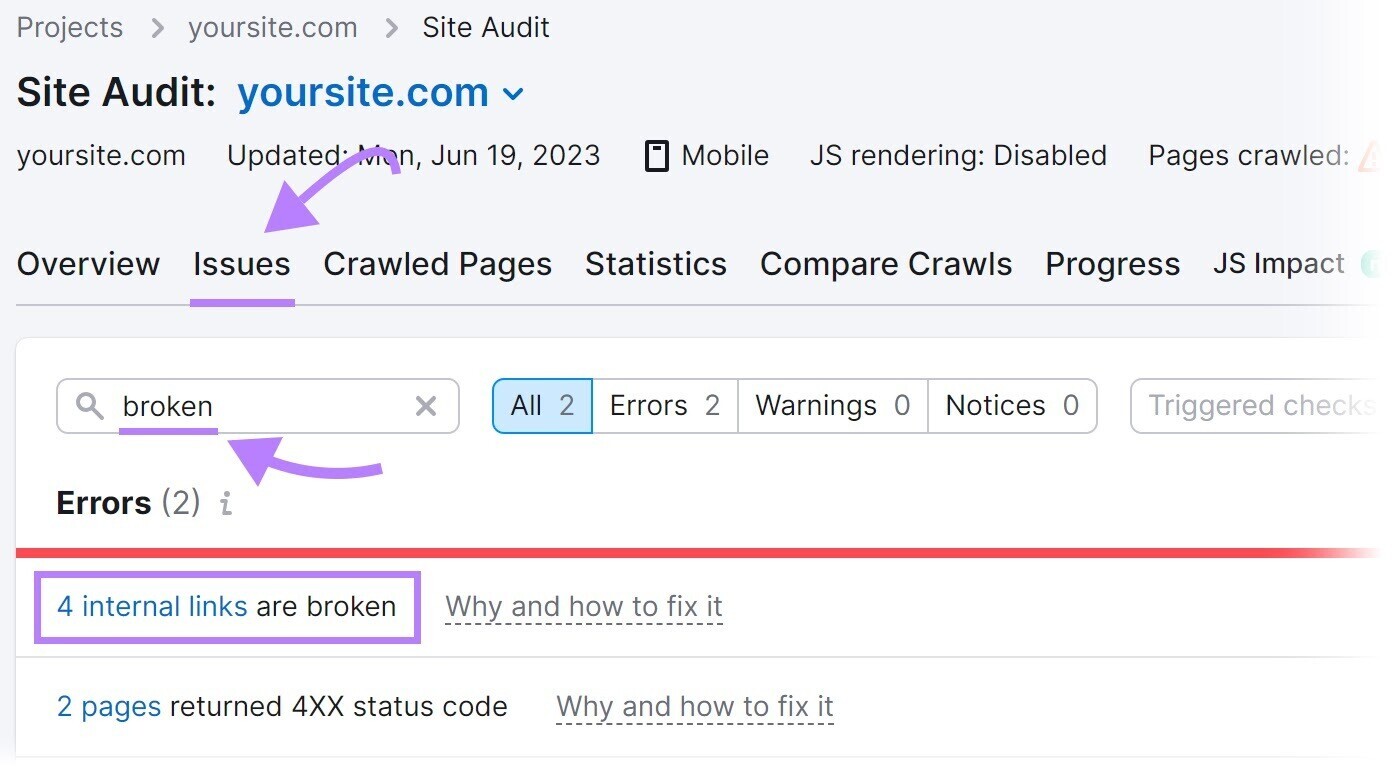

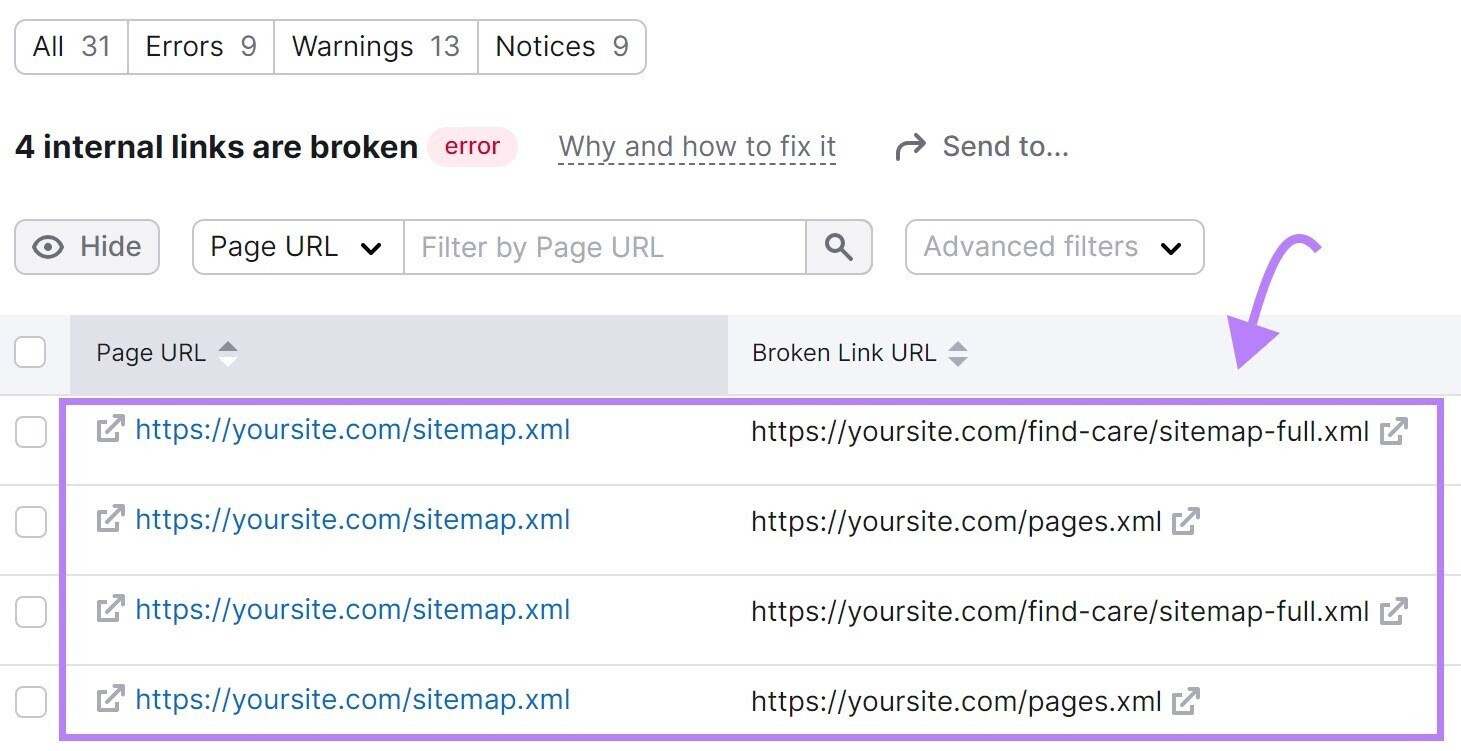

To find broken links on your site, use the Site Audit tool.

Navigate to the “Issues” tab and search for “broken.”

Next, click “# internal links are broken.” And you’ll see a report listing all your broken links.

To fix these broken links, substitute a different link, restore the missing page, or add a 301 redirect to another relevant page on your site.
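If you want to spot-check a handful of internal links yourself, you can request each URL and look at the status code. Here’s a minimal sketch using Python’s standard library, assuming you already have a list of internal link URLs. The function names are hypothetical. It sends HEAD requests to avoid downloading page bodies, but some servers respond to HEAD differently than to GET, so confirm anything suspicious in a browser. Connection failures (like DNS errors) raise urllib.error.URLError, which this sketch doesn’t catch.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable

BROKEN_STATUSES = {404, 410}  # "Not Found" and "Gone"


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url. urlopen follows redirects first."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises HTTPError for 4xx/5xx responses; the code is on the error
        return error.code


def find_broken_links(urls: Iterable[str], get_status: Callable[[str], int] = http_status) -> list[str]:
    """Return the URLs whose status code marks them as broken."""
    return [url for url in urls if get_status(url) in BROKEN_STATUSES]


# Example call (this makes real network requests):
# find_broken_links(["https://www.example.com/pricing", "https://www.example.com/old-page"])
```

Passing a different get_status function lets you run the same check against status codes you’ve already collected, such as a crawl export.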

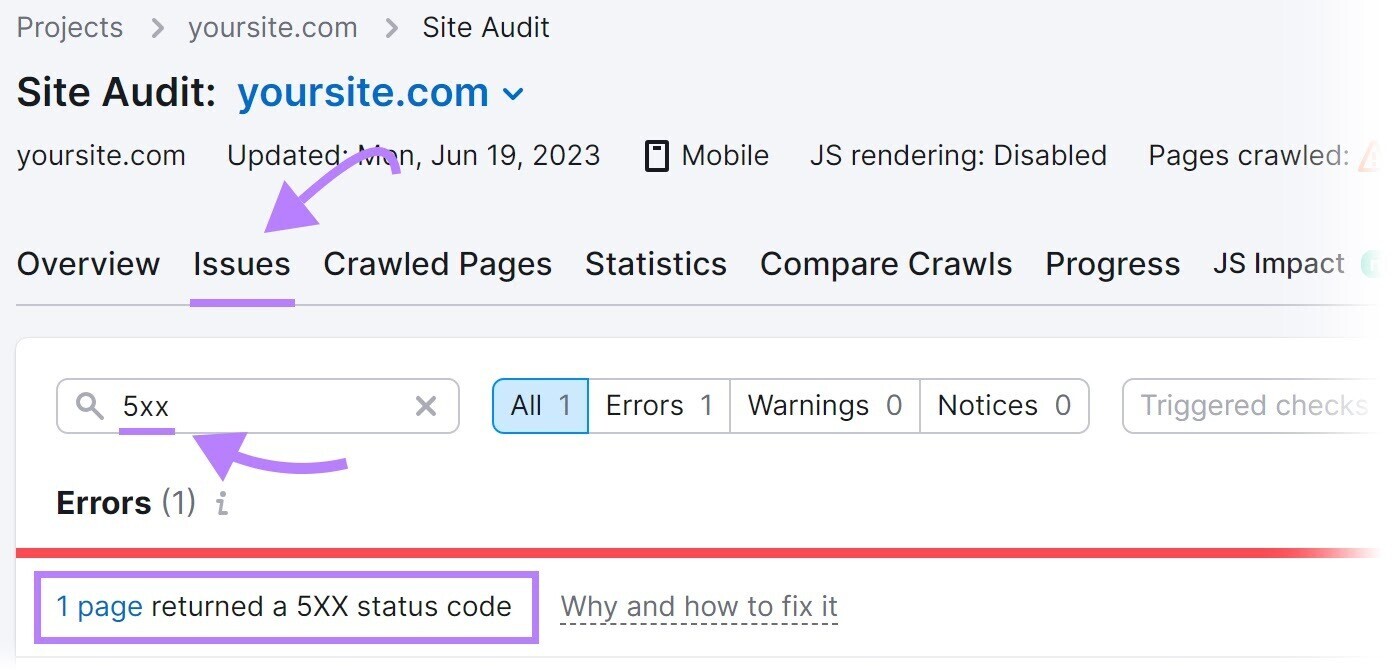

9. Server-Side Errors

Server-side errors (like 500 HTTP status codes) disrupt the crawling process because they mean the server couldn’t fulfill the request. Which makes it difficult for bots to crawl your website’s content.

Semrush’s Site Audit tool can help you find server-side errors.

Search for “5xx” in the “Issues” tab.

If errors are present, click “# pages returned a 5XX status code” to view a complete list of affected pages.

Then, send this list to your developer to configure the server properly.

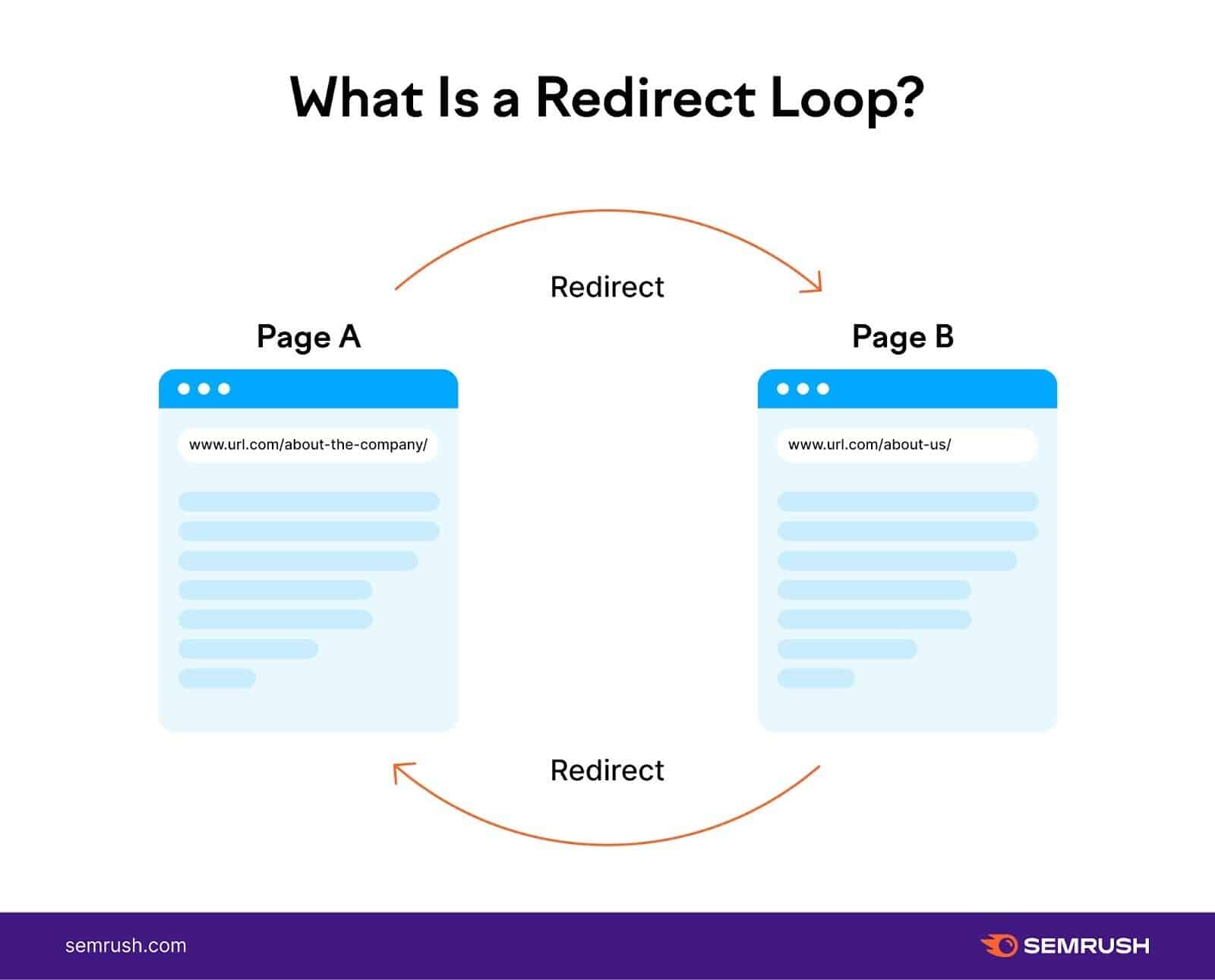

10. Redirect Loops

A redirect loop is when one page redirects to another, which then redirects back to the original page. And forms a continuous loop.

Redirect loops prevent search engine bots from reaching a final destination by trapping them in an endless cycle of redirects between two (or more) pages. Which wastes crawl budget that could be spent on important pages.
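Detecting a loop comes down to following each redirect and remembering where you’ve been. If you land on a URL you’ve already visited, you’ve found a loop. This sketch assumes you have a mapping of redirecting URLs to their targets (for example, from a crawl export). The function name and paths are hypothetical.

```python
from typing import Optional


def find_redirect_loop(redirects: dict[str, str], start: str) -> Optional[list[str]]:
    """Follow redirects from start. Return the looping URLs, or None if the chain ends."""
    visited = []
    url = start
    while url in redirects:
        if url in visited:
            # Slice from the first time we saw this URL to show just the loop
            return visited[visited.index(url):] + [url]
        visited.append(url)
        url = redirects[url]
    return None  # reached a URL that doesn't redirect: a final destination


redirects = {"/old-page": "/new-page", "/new-page": "/old-page", "/promo": "/sale"}
print(find_redirect_loop(redirects, "/old-page"))  # ['/old-page', '/new-page', '/old-page']
print(find_redirect_loop(redirects, "/promo"))  # None
```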

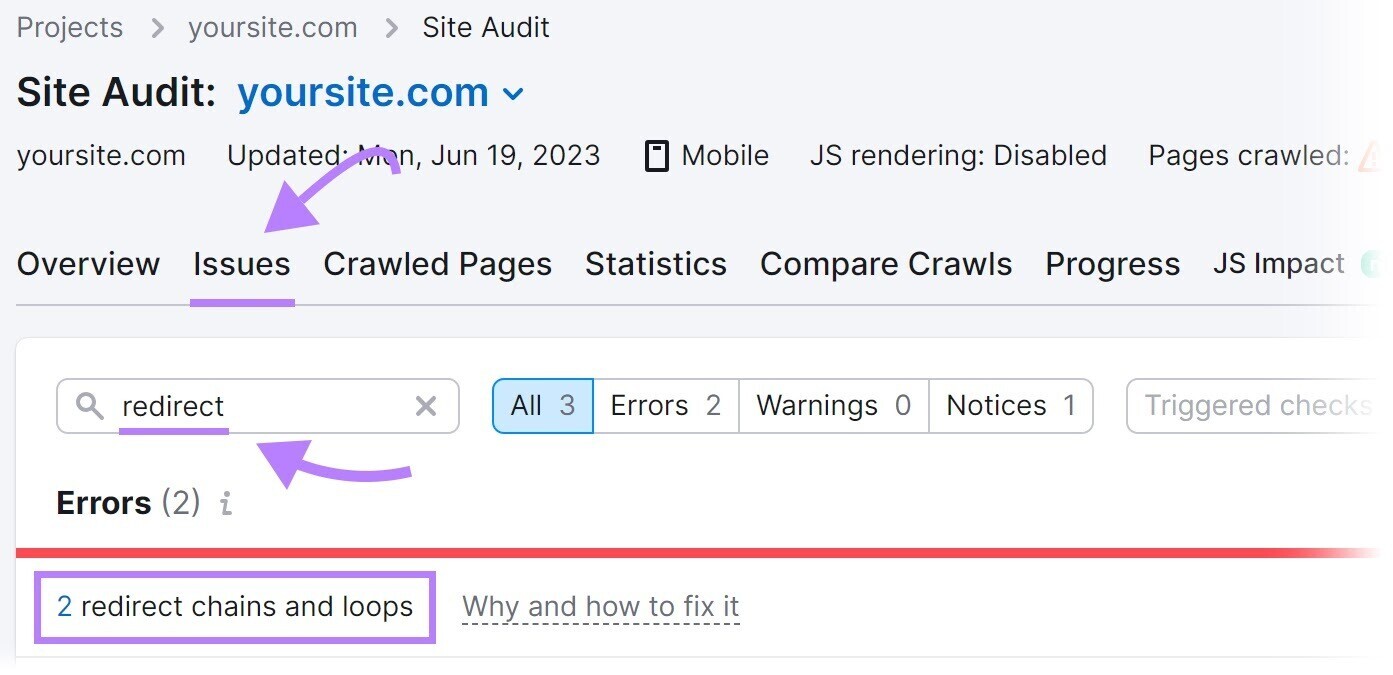

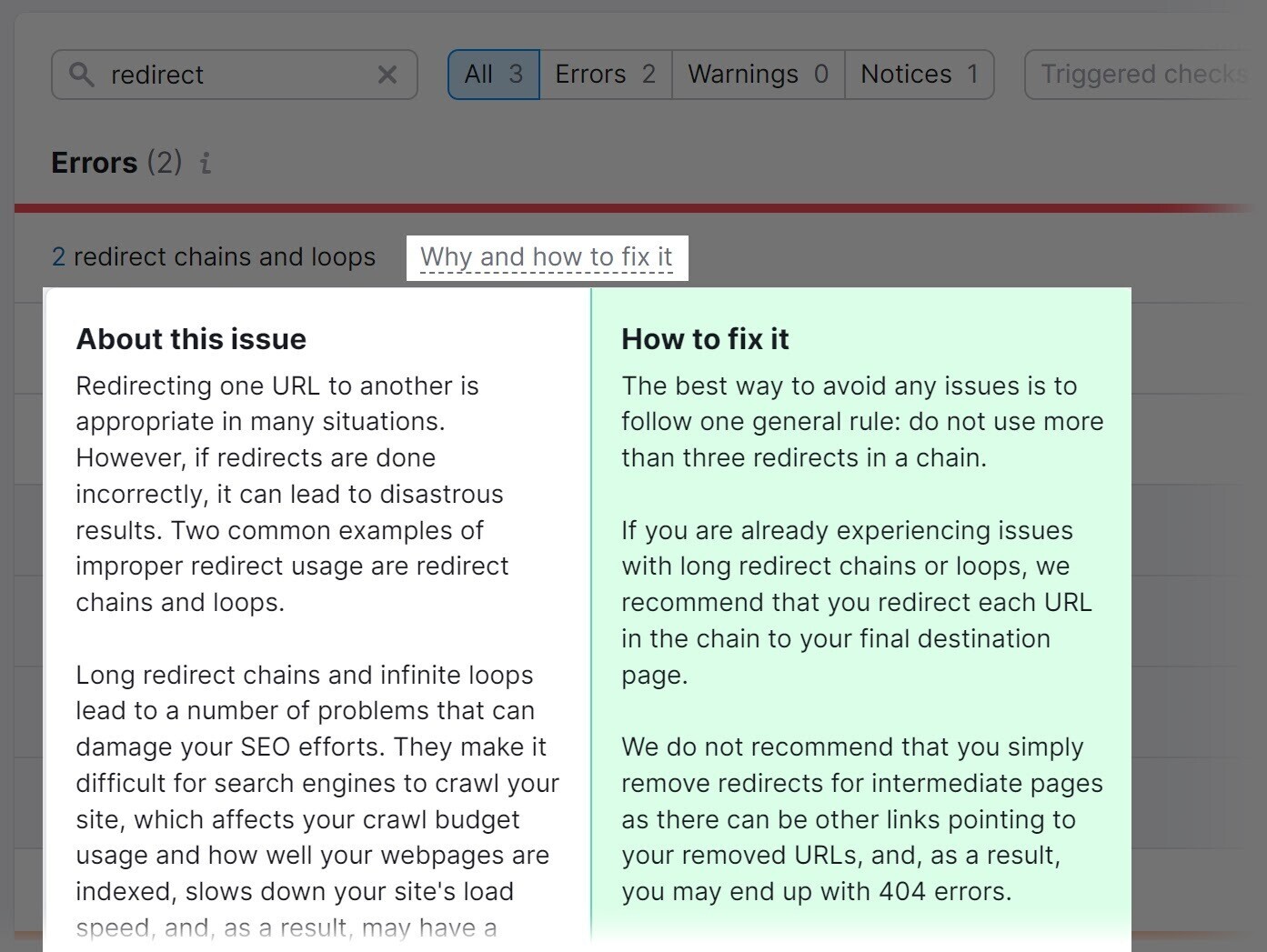

Solve this by identifying and fixing redirect loops on your site with the Site Audit tool.

Search for “redirect” in the “Issues” tab.

The tool will display redirect loops. And offer advice on how to address them when you click “Why and how to fix it.”

11. Access Restrictions

Pages with access restrictions (like those behind login forms or paywalls) can prevent search engine bots from crawling them.

As a result, those pages may not appear in search results, limiting their visibility to users.

It makes sense to have certain pages restricted.

For example, membership-based websites or subscription platforms often have restricted pages that are accessible only to paying members or registered users.

This allows the site to provide exclusive content, special offers, or personalized experiences. To create a sense of value and incentivize users to subscribe or become members.

But if significant portions of your website are restricted, that’s a crawlability mistake.

So, assess the need for restricted access for each page and keep restrictions only on pages that truly require them. Remove restrictions on those that don’t.

Note

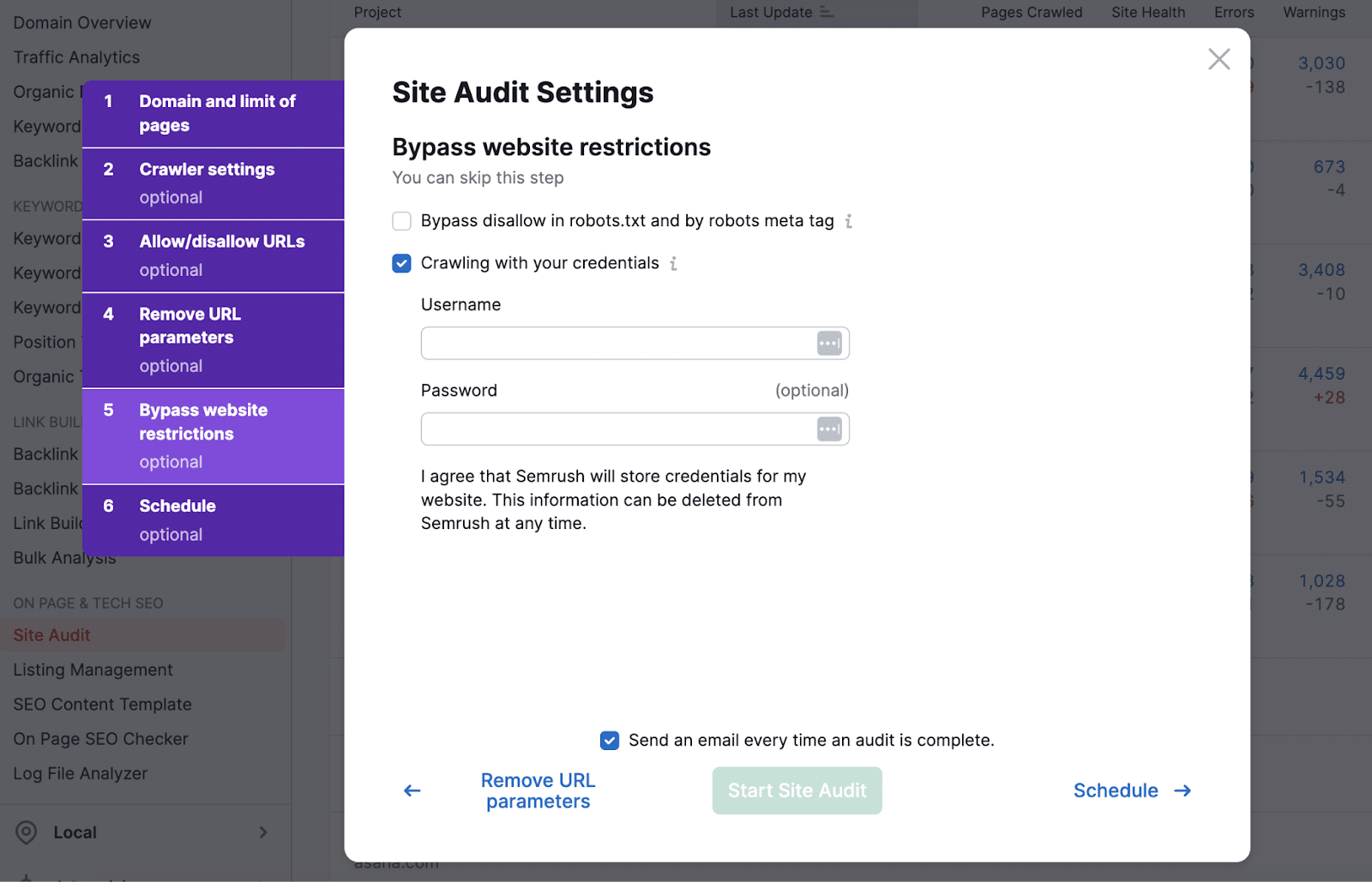

If you ever want to crawl pages with access restrictions for other issues, Site Audit makes it easy to bypass those restrictions.

12. URL Parameters

URL parameters (also known as query strings) are parts of a URL that follow a question mark (?) and help with tracking and organization. Like example.com/shoes?color=blue.

And they can significantly impact your site’s crawlability.

How?

URL parameters can create an almost infinite number of URL variations.

You’ve probably seen that on ecommerce category pages. When you apply filters (size, color, brand, etc.), the URL often changes to reflect those selections.

And if your website has a large catalog, suddenly you have thousands or even millions of URLs across your site.
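The growth is multiplicative. Each filter on a category page can be left unset or set to one of its values, so a filter with n options contributes n + 1 choices, and the choices multiply. This sketch uses hypothetical numbers (5 sizes, 10 colors, 20 brands) and assumes one value per filter with parameters always in the same order. Allowing multiple values or different parameter orders would produce even more URLs.

```python
from math import prod


def url_variations(options_per_filter: list[int]) -> int:
    """Count the URLs one category page can produce when every filter is optional."""
    # Each filter is either unset or set to one of its n values: n + 1 choices
    return prod(n + 1 for n in options_per_filter)


# Hypothetical filters: 5 sizes, 10 colors, 20 brands
per_category = url_variations([5, 10, 20])
print(per_category)  # 1386
print(per_category * 100)  # 138600 across a hypothetical 100 categories
```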

If they aren’t managed well, Google will waste crawl budget on the parameterized URLs. Which may result in some of your other important pages not being crawled.

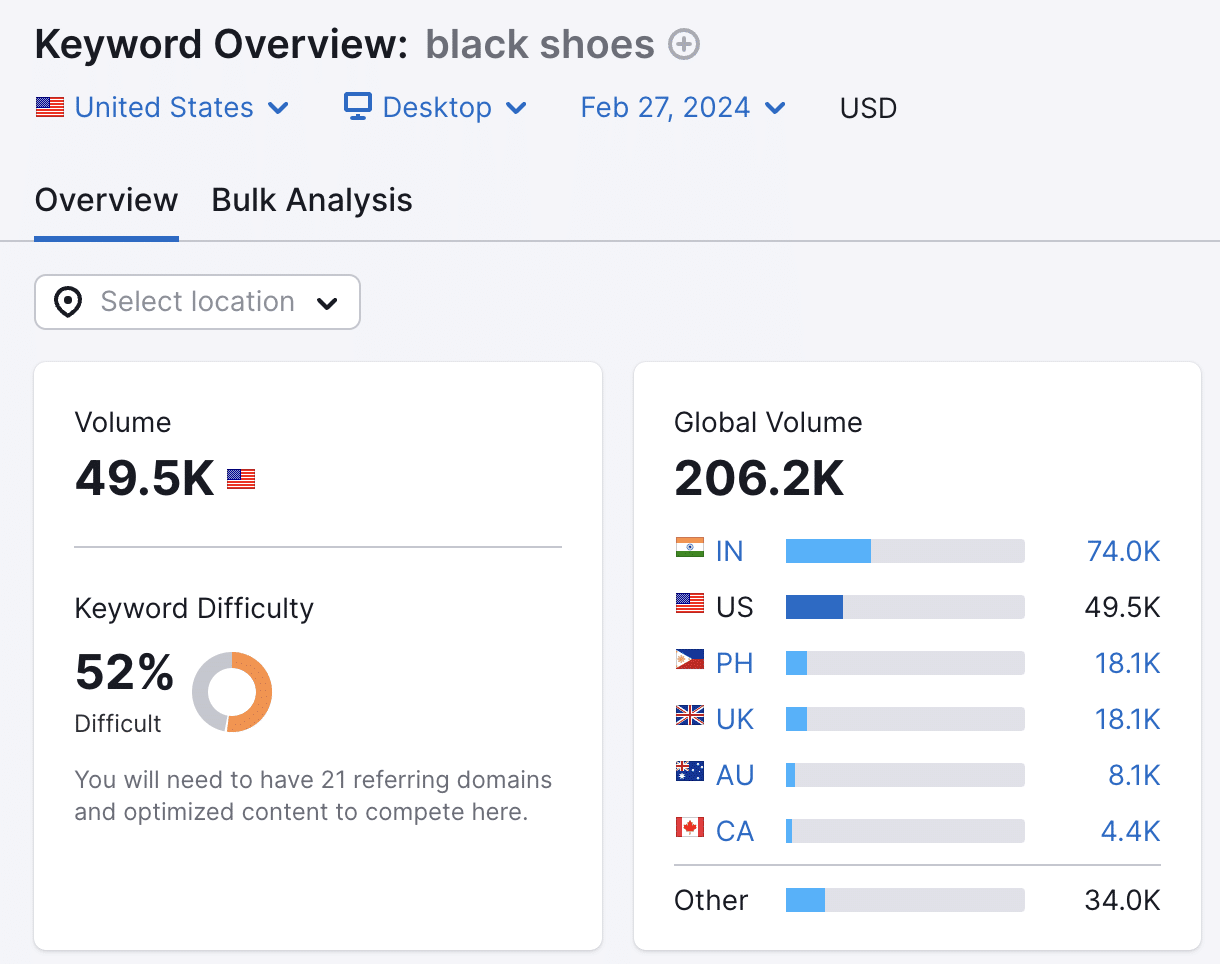

So, you need to decide which URL parameters are helpful for search and should be crawled. You can do this by understanding whether people are searching for the specific content the page generates when a parameter is applied.

For example, people often like to search by the color they’re looking for when shopping online.

Like “black shoes.”

This means the “color” parameter is helpful. And a URL like example.com/shoes?color=black should be crawled.

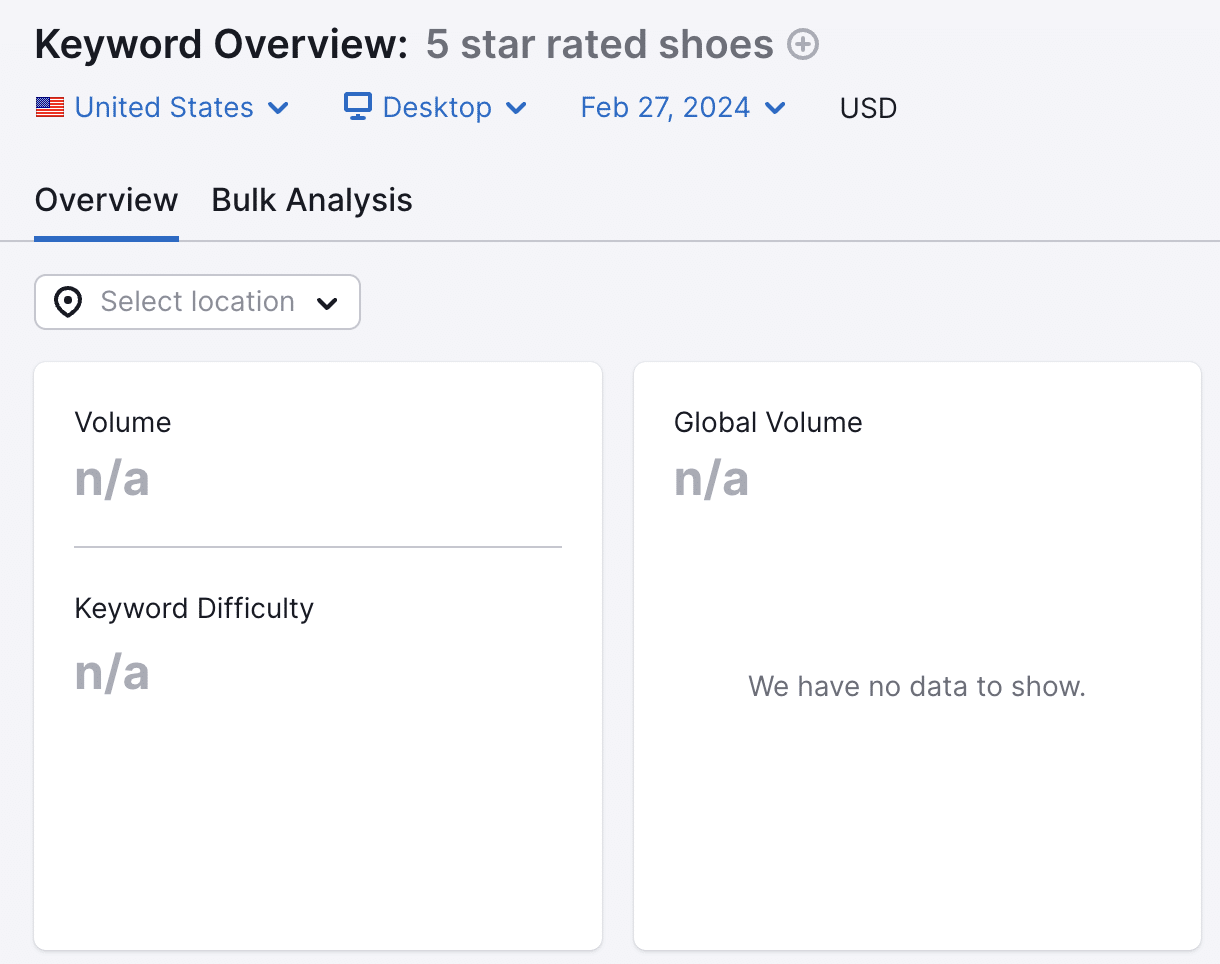

But some parameters aren’t helpful for search and shouldn’t be crawled.

For example, the “rating” parameter that filters the products by their customer ratings. Such as example.com/shoes?rating=5.

Almost nobody searches for shoes by customer rating.

That means you should prevent URLs that aren’t helpful for search from being crawled. Either by using a robots.txt file or by using the nofollow tag for internal links to those parameterized URLs.

Doing so will ensure your crawl budget is being spent efficiently. And on the right pages.
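Once you’ve decided which parameters are helpful, sorting parameterized URLs into “crawl” and “block” buckets is straightforward. This is a minimal sketch using Python’s urllib.parse. The HELPFUL_PARAMS set (containing only “color,” based on the shoe examples above) and the should_crawl function are hypothetical. It treats a URL as crawl-worthy only if every parameter in it is on the helpful list.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical: parameters you've confirmed people actually search for
HELPFUL_PARAMS = {"color"}


def should_crawl(url: str, helpful: set[str] = HELPFUL_PARAMS) -> bool:
    """True if every query parameter in url is one you want crawled."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return set(params) <= helpful


for url in [
    "https://example.com/shoes?color=black",
    "https://example.com/shoes?rating=5",
    "https://example.com/shoes?color=black&rating=5",
]:
    print(url, "crawl" if should_crawl(url) else "block")
```

URLs in the “block” bucket are candidates for the robots.txt or nofollow treatment described above.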

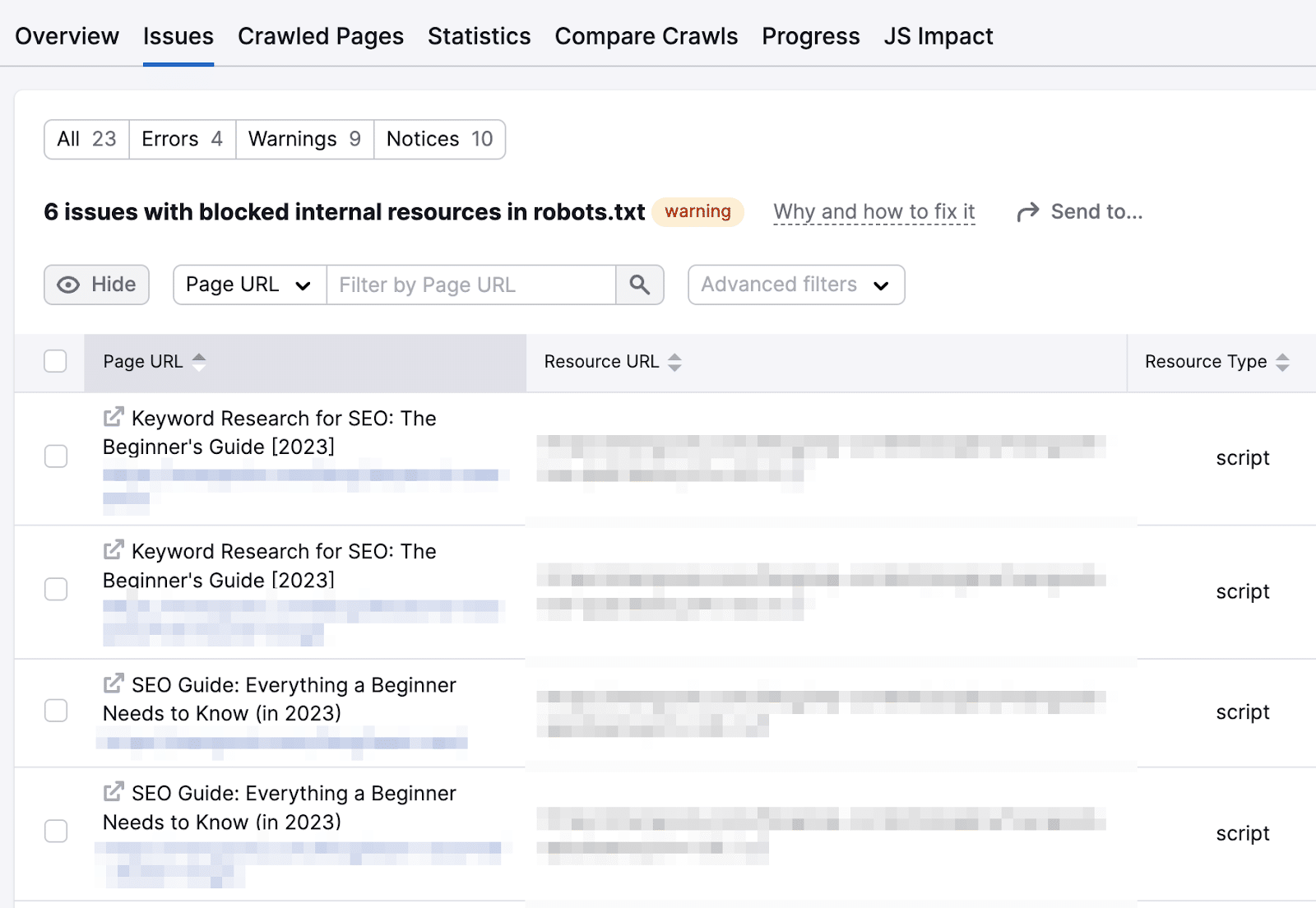

13. JavaScript Resources Blocked in Robots.txt

Many modern websites are built using JavaScript (a popular programming language). And that code is contained in .js files.

But blocking access to these .js files via robots.txt can inadvertently create crawlability issues. Especially if you block essential JavaScript files.

For example, if you block a JavaScript file that loads the main content of a page, the crawlers may not be able to see that content.

So, review your robots.txt file to ensure that you’re not blocking anything important.
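For a quick check of specific files, you can run the script URLs your pages load through Python’s robots.txt parser. This sketch assumes you have your robots.txt contents and a list of .js URLs, and the function name and paths are hypothetical. One caveat: Python’s parser matches rules as plain path prefixes and doesn’t interpret the * and $ wildcards that Google supports. So a rule like “Disallow: /*.js$” won’t be evaluated the way Googlebot evaluates it.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, resource_urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the resource URLs that the robots.txt rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parses the text directly, no download
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


rules = "User-agent: *\nDisallow: /assets/js/"
scripts = [
    "https://www.example.com/assets/js/main-content.js",
    "https://www.example.com/static/analytics.js",
]
print(blocked_resources(rules, scripts))
# ['https://www.example.com/assets/js/main-content.js']
```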

Or use Semrush’s Site Audit tool.

Go to the “Issues” tab and search for “blocked.”

If issues are detected, click on the blue links.

And you’ll see the exact resources that are blocked.

At this point, it’s best to get help from your developer.

They can tell you which JavaScript files are essential to your website’s functionality and content visibility. And shouldn’t be blocked.

14. Duplicate Content

Duplicate content refers to identical or nearly identical content that appears on multiple pages across your site.

For example, imagine you publish a blog post on your site. And that post is accessible via multiple URLs:

- example.com/blog/your-post

- example.com/news/your-post

- example.com/articles/your-post

Even though the content is the same, the URLs are different. And search engines will aim to crawl all of them.

This wastes crawl budget that could be better spent on other important pages on your website. Use Semrush’s Site Audit to identify and eliminate these problems.

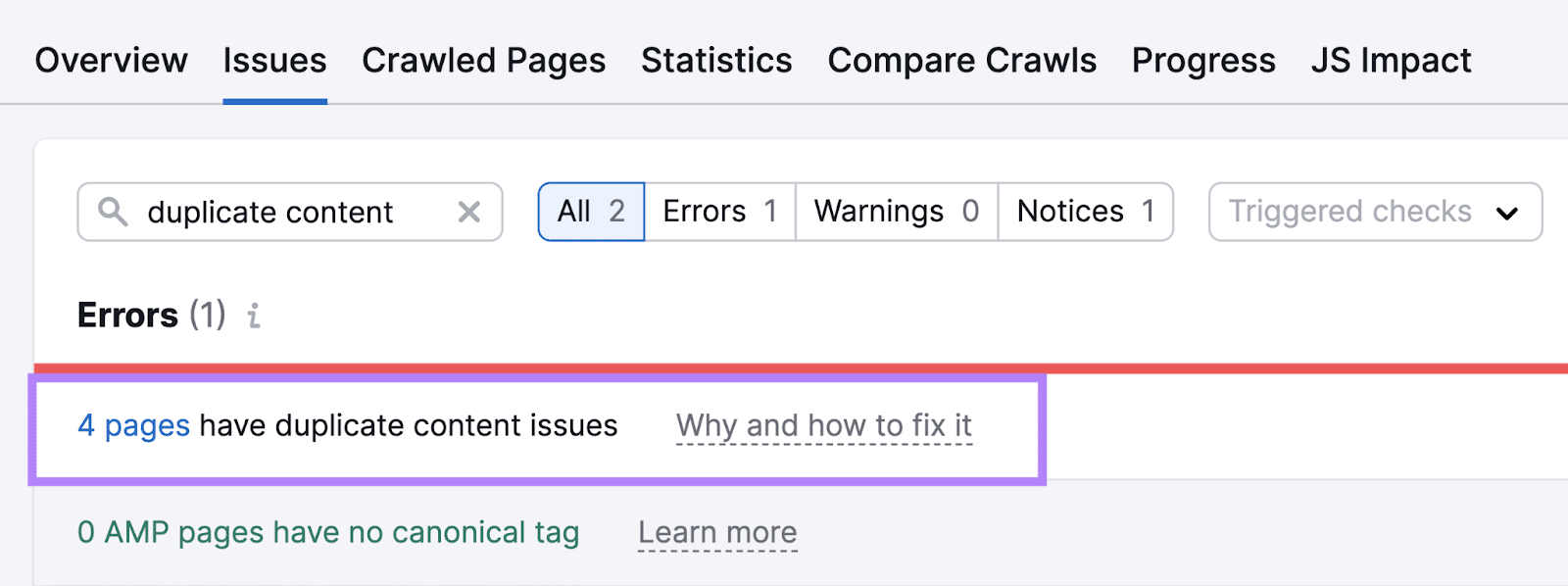

Go to the “Issues” tab and search for “duplicate content.” And you’ll see whether there are any errors detected.

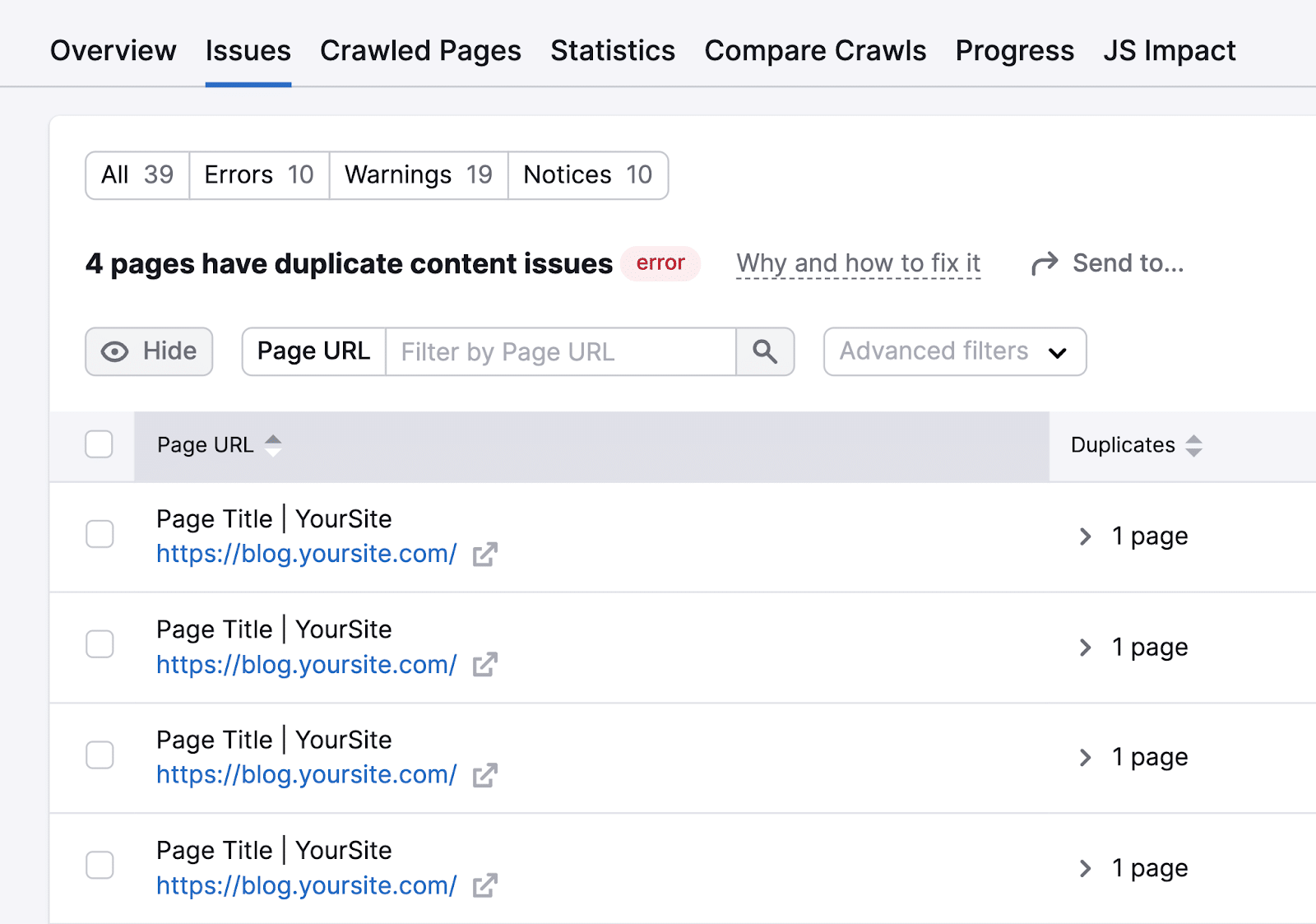

Click the “# pages have duplicate content issues” link to see a list of all the affected pages.

If the duplicates are mistakes, redirect those pages to the main URL that you want to keep.

If the duplicates are necessary (like if you’ve intentionally placed the same content in multiple sections to address different audiences), you can implement canonical tags. Which help search engines identify the main page you want to be indexed.
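To find exact duplicates in your own crawl data, you can fingerprint each page’s text and group URLs that share a fingerprint. Then each duplicate page in a group gets a canonical tag pointing to the URL you want indexed. This is a minimal sketch under a few assumptions: pages holds the extracted text for each URL, the first URL in each group is treated as the main one, and the function names are hypothetical. Hashing only catches pages whose text is identical after normalizing case and whitespace. Nearly identical pages need a fuzzier comparison.

```python
import hashlib
import html
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose text has the same fingerprint; keep only groups of 2+."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


def canonical_tag(main_url: str) -> str:
    """The tag to place in the <head> of each duplicate page."""
    return f'<link rel="canonical" href="{html.escape(main_url, quote=True)}">'


pages = {
    "https://example.com/blog/your-post": "Ten tips for faster pages.",
    "https://example.com/news/your-post": "Ten  tips for FASTER pages.",
    "https://example.com/about": "About us.",
}
for group in duplicate_groups(pages):
    main_url, *duplicates = group
    for url in duplicates:
        print(url, "->", canonical_tag(main_url))
```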

15. Poor Mobile Experience

Google uses mobile-first indexing. This means it looks at the mobile version of your site over the desktop version when crawling and indexing your site.

If your site takes a long time to load on mobile devices, it can affect your crawlability. And Google may need to allocate more time and resources to crawl your entire site.

Plus, if your site isn’t responsive (meaning it doesn’t adapt to different screen sizes or work as intended on mobile devices), Google may find it harder to understand your content and access other pages.

So, review your site to see how it works on mobile. And find slow-loading pages on your site with Semrush’s Site Audit tool.

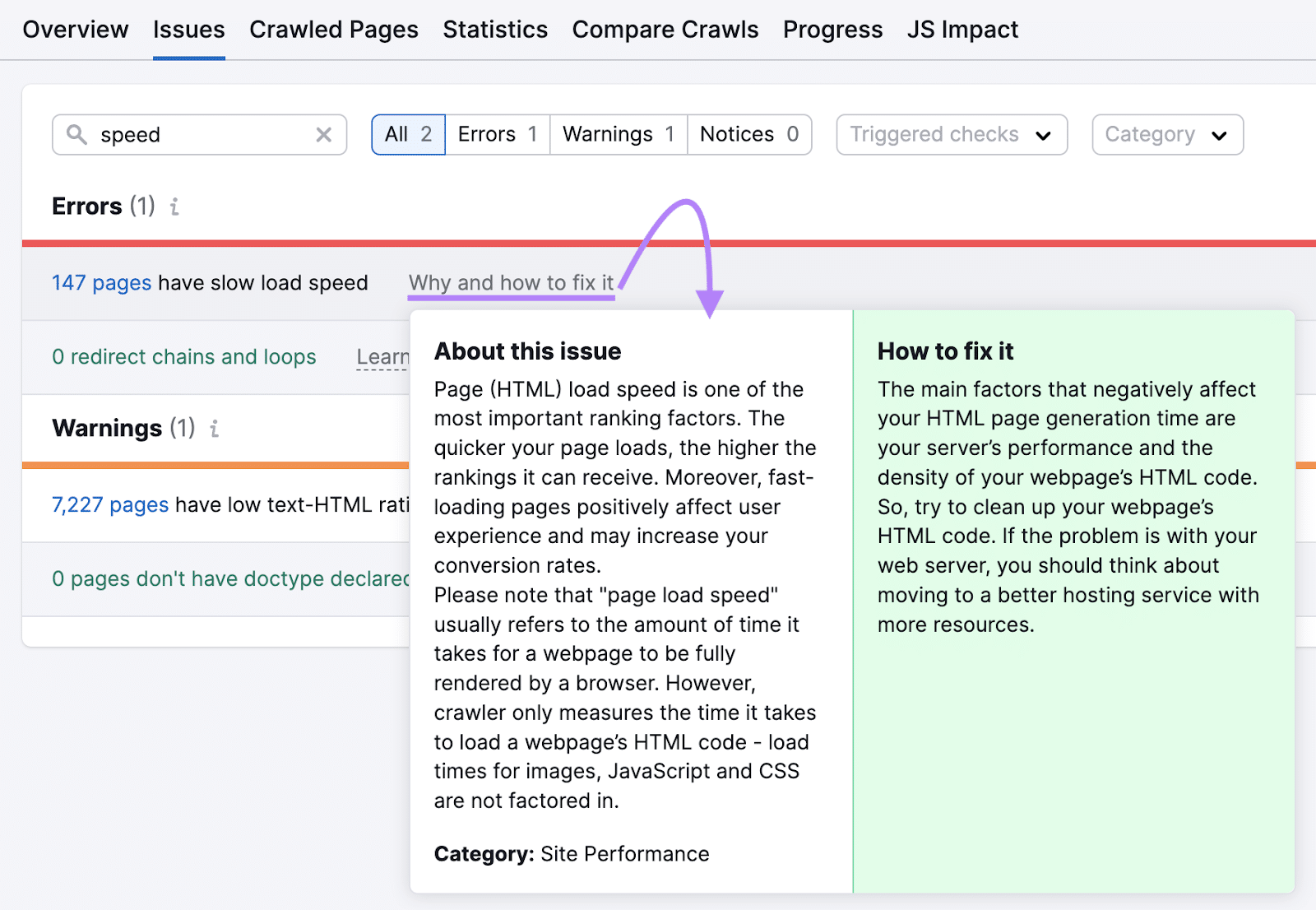

Navigate to the “Issues” tab and search for “speed.”

The tool will show the error if you have affected pages. And offer advice on how to improve their speed.

Stay Ahead of Crawlability Issues

Crawlability problems aren’t a one-time thing. Even if you solve them now, they might recur in the future. Especially if you have a large website that undergoes frequent changes.

That’s why regularly monitoring your site’s crawlability is so important.

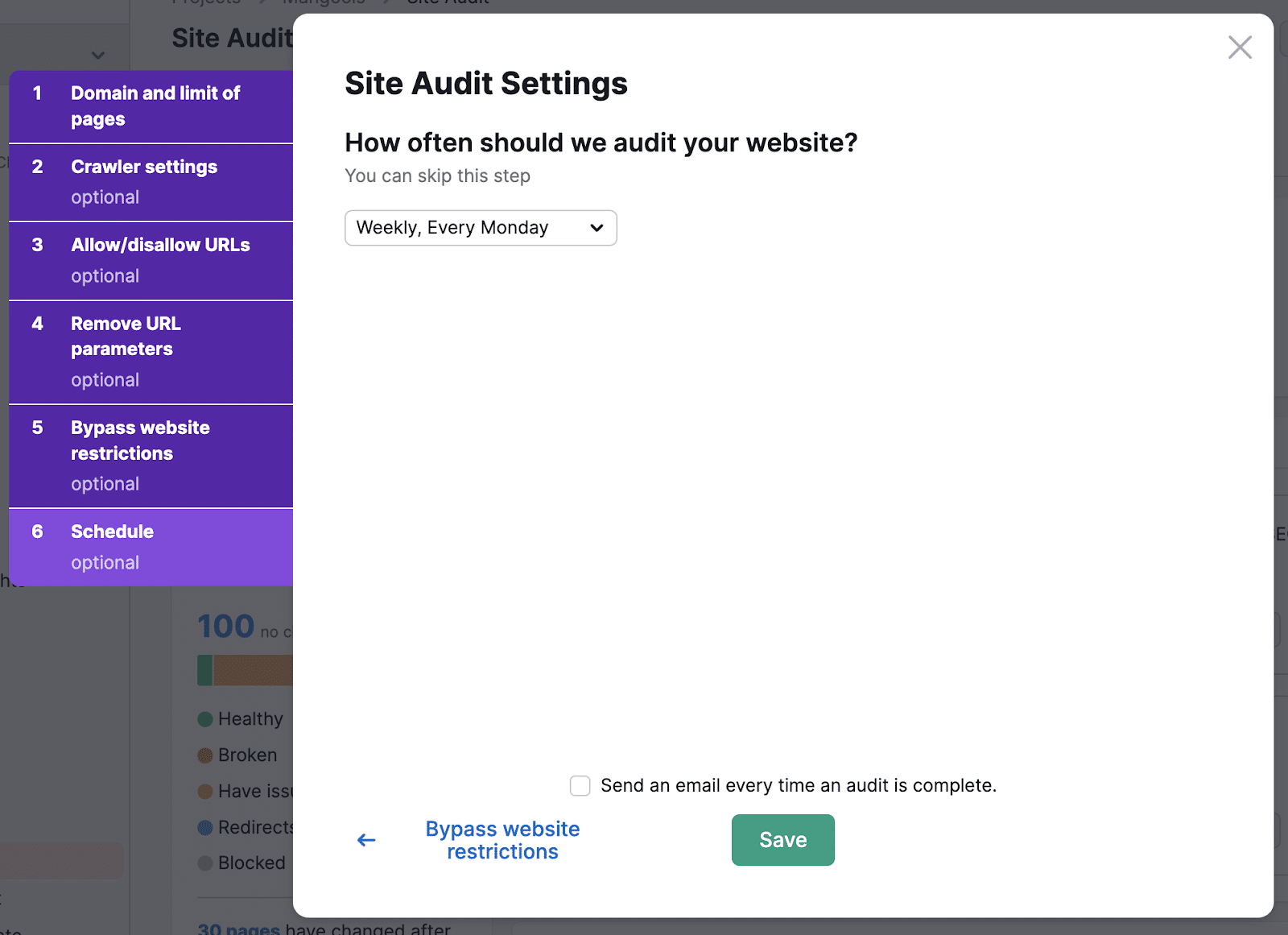

With our Site Audit tool, you can perform automated checks on your site’s crawlability.

Just navigate to the audit settings for your site and turn on weekly audits.

Now, you don’t have to worry about missing any crawlability issues.